Semantic Lidar Mapping

National Key Research Projects

An invention patent application has been filed for this project and has entered the substantive examination stage.

This project uses YOLO to obtain the size, position, and semantic label of each object in every camera frame, then tracks the objects across frames using ORB features and the IoU (intersection over union) of their 2D bounding boxes. In parallel, the Gmapping algorithm estimates the robot's pose and builds a raster (occupancy grid) map.
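The IoU part of the tracking step can be illustrated with a minimal sketch. It assumes boxes are given as `(x1, y1, x2, y2)` pixel corners and uses a simple greedy matcher with a hypothetical threshold of 0.3. The function names, the threshold, and the greedy strategy are illustrative assumptions, not the project's actual implementation, and the ORB feature check is omitted.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_boxes(prev_boxes, curr_boxes, threshold=0.3):
    """Greedily pair current boxes with previous boxes by descending IoU.

    Returns a dict mapping current-box index -> previous-box index.
    The threshold value is an assumption chosen for illustration.
    """
    pairs = sorted(
        ((iou(p, c), i, j)
         for i, p in enumerate(prev_boxes)
         for j, c in enumerate(curr_boxes)),
        reverse=True,
    )
    matches, used_prev = {}, set()
    for score, i, j in pairs:
        if score < threshold:
            break  # remaining pairs overlap too little to be the same object
        if i in used_prev or j in matches:
            continue  # each box may be matched at most once
        matches[j] = i
        used_prev.add(i)
    return matches
```

Current boxes left unmatched would start new tracks. In practice, an appearance cue such as ORB descriptor matching helps resolve cases where overlap alone is ambiguous.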

Once the system is running, it combines the historical 2D bounding boxes of each tracked object with the robot's motion information to jointly solve for the 3D bounding box of every object.

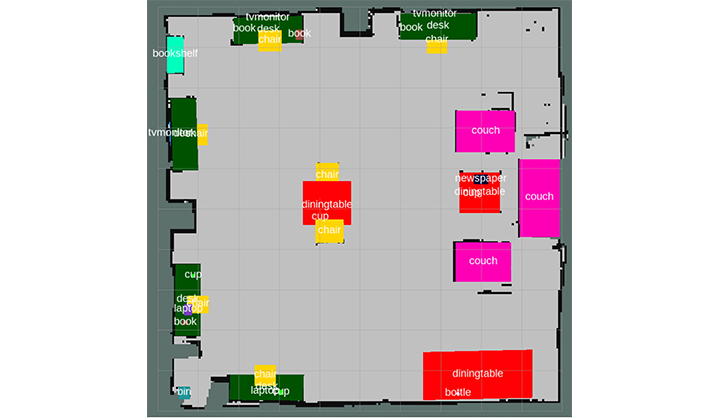

Finally, the 3D bounding box corresponding to each object is projected onto the raster map to generate a rough 2D semantic map.
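The projection step can be sketched as follows. This is a simplified illustration that assumes each 3D box is axis-aligned in the map frame and given as `(x_min, y_min, z_min, x_max, y_max, z_max)` in metres, and that the grid is a list of rows with a known resolution and origin. The function name, the box layout, and the integer labels are assumptions made for the example.

```python
import math


def project_box_to_grid(grid, box, label, resolution, origin=(0.0, 0.0)):
    """Write `label` into every grid cell covered by the box's XY footprint.

    grid:       list of rows, indexed as grid[row][col] (row along y, col along x)
    box:        (x_min, y_min, z_min, x_max, y_max, z_max) in the map frame, metres
    resolution: cell size in metres
    origin:     map-frame (x, y) of the corner of cell (0, 0)
    """
    x_min, y_min, _, x_max, y_max, _ = box  # height is dropped by the projection
    rows, cols = len(grid), len(grid[0])
    # Convert metric extents to cell indices, clamped to the grid bounds.
    c0 = max(0, math.floor((x_min - origin[0]) / resolution))
    c1 = min(cols - 1, math.floor((x_max - origin[0]) / resolution))
    r0 = max(0, math.floor((y_min - origin[1]) / resolution))
    r1 = min(rows - 1, math.floor((y_max - origin[1]) / resolution))
    for r in range(r0, r1 + 1):
        for c in range(c0, c1 + 1):
            grid[r][c] = label
    return grid


# Example: a 4x4 grid with 0.5 m cells and one object with class label 3.
semantic = [[0] * 4 for _ in range(4)]
project_box_to_grid(semantic, (0.6, 0.6, 0.0, 1.4, 0.9, 1.0), label=3, resolution=0.5)
```

Boxes that fall entirely outside the grid leave it unchanged, because the clamped index ranges become empty. An oriented box would need its footprint rasterized as a rotated rectangle instead.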

The algorithm runs inference at 30 Hz on an NVIDIA Jetson TX2, which is fast enough for a robot to perceive its environment in real time. The resulting rough semantic map is sufficient for path planning tasks with semantic targets.