Cloth stacking in a human-machine collaborative environment

Abstract

This design mainly realizes two functions:

1. The camera automatically recognizes cloth randomly stacked on the table, and the system arranges and stacks the cloth autonomously.

2. Through behavioral cloning of actions completed by humans, the system drives the robotic arm to reproduce those actions and stores them, realizing automatic programming of robotic arm stacking.

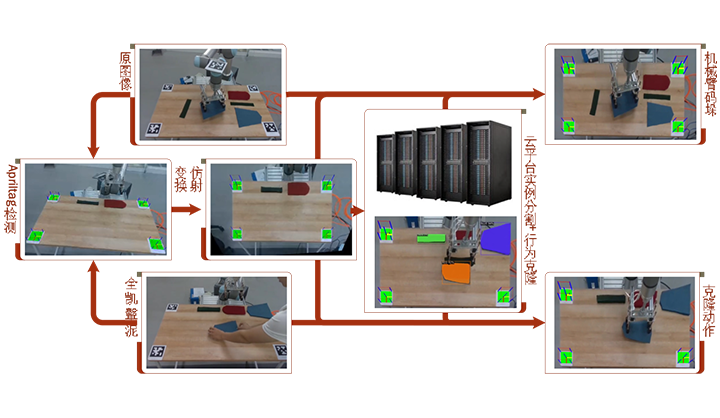

The overall flow chart of the work is shown in Figure 1.

Figure 1 Block diagram of the overall process

First, the camera acquires an image, and an affine transformation yields the cloth distribution map from a top-view perspective. Next, the BlendMask algorithm segments the top view to obtain the segmentation map. A minimum bounding box algorithm then calculates the center point coordinates (x, y) and the rotation angle of each cloth. These values are sent to the behavior recognition module, which encodes the execution process of the human behavior. Finally, the encoded actions are output to the robotic arm, which reproduces the motion and completes the grasping task.
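The minimum bounding box step can be illustrated with a short sketch. The source does not say which implementation is used (OpenCV's `cv2.minAreaRect` is a common choice), so the following is only a dependency-free illustration under stated assumptions: the function names `convex_hull` and `min_area_rect` are hypothetical, the input is a list of (x, y) mask pixel coordinates for one cloth instance, and the returned angle is the direction of the longer side in degrees, in the range [0, 180).

```python
import math

def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def min_area_rect(points):
    """Return (center, (long_side, short_side), angle_deg) of the smallest
    enclosing rectangle. One side of that rectangle always lies along a
    convex hull edge, so it is enough to try every hull edge direction."""
    hull = convex_hull(points)
    if len(hull) < 3:
        raise ValueError("need at least three non-collinear points")
    best = None
    for i in range(len(hull)):
        (x1, y1), (x2, y2) = hull[i], hull[(i + 1) % len(hull)]
        theta = math.atan2(y2 - y1, x2 - x1)
        c, s = math.cos(theta), math.sin(theta)
        # Coordinates in a frame whose u-axis runs along the current edge.
        us = [x * c + y * s for x, y in hull]
        vs = [-x * s + y * c for x, y in hull]
        w, h = max(us) - min(us), max(vs) - min(vs)
        # Small tolerance so ties caused by rounding keep the first candidate.
        if best is None or w * h < best[0] - 1e-9:
            uc, vc = (max(us) + min(us)) / 2, (max(vs) + min(vs)) / 2
            center = (uc * c - vc * s, uc * s + vc * c)  # back to image frame
            best = (w * h, center, w, h, theta)
    _, center, w, h, theta = best
    angle = math.degrees(theta)
    if w < h:  # report the direction of the longer side
        w, h, angle = h, w, angle + 90.0
    return center, (w, h), angle % 180.0

# Usage: an axis-aligned 4 x 2 rectangle with one interior point.
center, size, angle = min_area_rect([(0, 0), (4, 0), (4, 2), (0, 2), (2, 1)])
```

A rectangle's orientation is only defined up to 180 degrees, which is why the angle is normalized; a gripper with a symmetric grasp would treat 30 and 210 degrees as the same pose.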

Background

Cloud-based robotic arm fabric stacking based on behavioral cloning in a human-machine collaborative environment is mainly used in the textile industry.

In textile production, every time the task changes, the robotic arm's grasping process must be reprogrammed to suit the new fabric stacking sequence and shapes. Reprogramming the robotic arm consumes considerable labor and time and slows down production. To address this problem, this paper designs a behavioral cloning algorithm based on image instance segmentation: the robotic arm imitates the process of stacking cloth by hand and adapts to the environment, so that newly added textile tasks are programmed automatically. In addition, this design uses AprilTag markers to map the desktop area to a fixed region in the pixel coordinate system, so that the camera in the robotic arm's fixed hand-eye system can be moved freely, meeting the textile industry's need for human-machine collaboration.

Content

This design divides the cloud-based robotic arm cloth stacking based on behavioral cloning in a human-machine collaborative environment into four tasks.

1. Transformation of the top view of the workbench area

To ensure that camera movement does not affect the position of the recognized objects, this paper uses AprilTag markers to obtain the true position of the workbench and maps the area where the workbench is located to a fixed position in the pixel coordinate system through an affine transformation.

Figure 2 Work plane distortion correction
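As a rough illustration of this mapping, the sketch below solves for the six parameters of a 2D affine transformation from three point correspondences, for example three detected tag centers and the fixed pixel positions they should occupy. It is only a sketch under assumptions: the function names `solve_affine` and `apply_affine` and the example coordinates are hypothetical, and tag detection itself is assumed to have been done elsewhere.

```python
def solve_affine(src, dst):
    """Solve x' = a*x + b*y + c, y' = d*x + e*y + f from three point pairs.

    src: three (x, y) points seen by the camera (e.g. detected tag centers).
    dst: the three fixed pixel positions those points should map to.
    Returns (a, b, c, d, e, f). Uses Cramer's rule on the 3x3 system.
    """
    (x1, y1), (x2, y2), (x3, y3) = src
    det = x1 * (y2 - y3) - y1 * (x2 - x3) + (x2 * y3 - x3 * y2)
    if abs(det) < 1e-12:
        raise ValueError("source points are collinear")

    def solve_row(v1, v2, v3):
        p = (v1 * (y2 - y3) - y1 * (v2 - v3) + (v2 * y3 - v3 * y2)) / det
        q = (x1 * (v2 - v3) - v1 * (x2 - x3) + (x2 * v3 - x3 * v2)) / det
        r = (x1 * (y2 * v3 - y3 * v2) - y1 * (x2 * v3 - x3 * v2)
             + v1 * (x2 * y3 - x3 * y2)) / det
        return p, q, r

    a, b, c = solve_row(dst[0][0], dst[1][0], dst[2][0])
    d, e, f = solve_row(dst[0][1], dst[1][1], dst[2][1])
    return a, b, c, d, e, f

def apply_affine(params, point):
    """Map one (x, y) point with the parameters returned by solve_affine."""
    a, b, c, d, e, f = params
    x, y = point
    return a * x + b * y + c, d * x + e * y + f

# Usage with made-up coordinates: three tag centers in the camera image are
# mapped to three corners of a fixed 400 x 400 workbench region.
tags_in_image = [(100.0, 50.0), (300.0, 60.0), (110.0, 250.0)]
fixed_positions = [(0.0, 0.0), (400.0, 0.0), (0.0, 400.0)]
params = solve_affine(tags_in_image, fixed_positions)
```

Because the parameters are recomputed from the tags in each frame, the workbench lands on the same pixel region wherever the camera is placed.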

2. Behavioral cloning

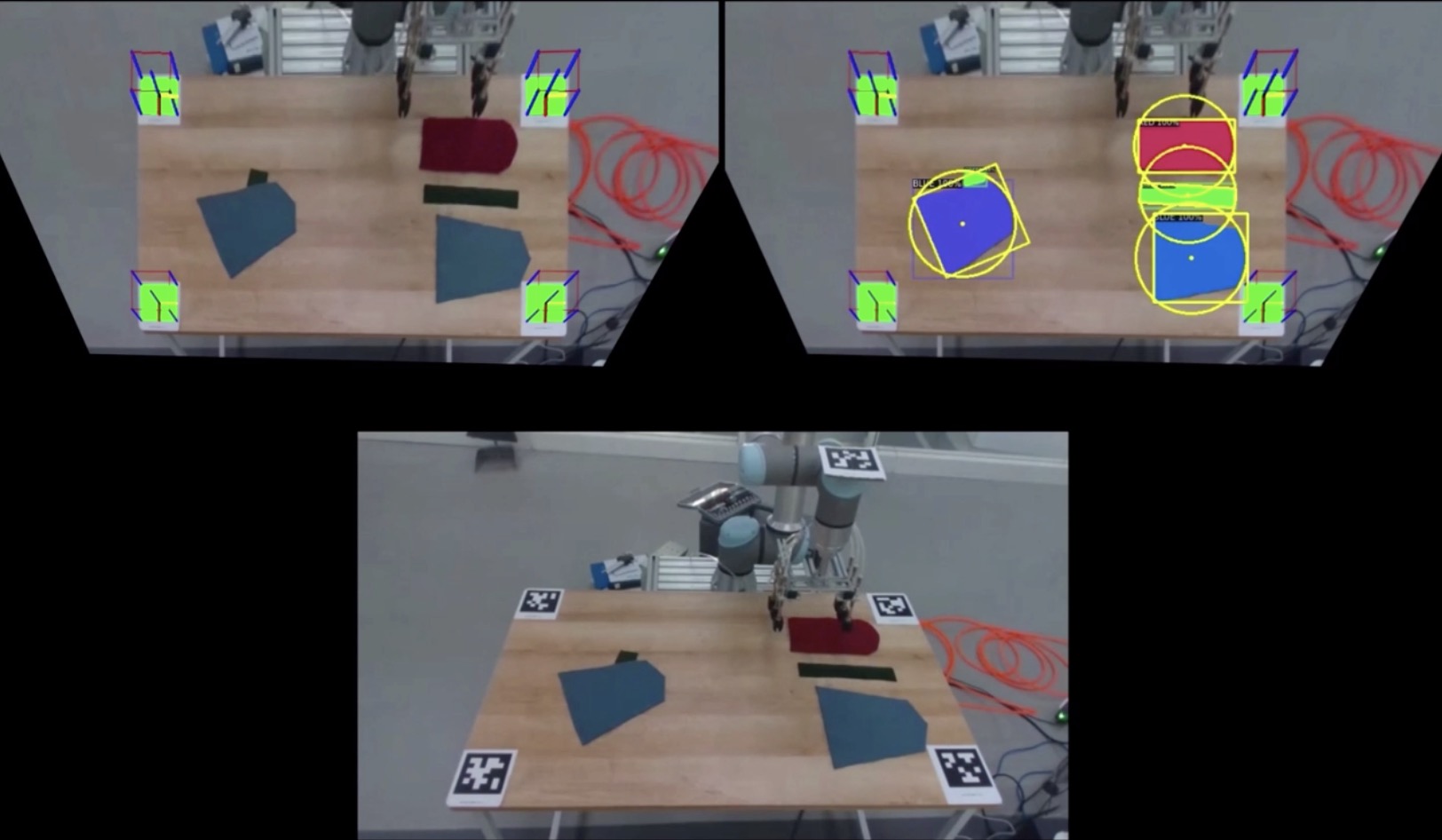

In this part, the instance segmentation algorithm obtains the instance mask of each cloth randomly placed on the workbench and outputs its center point, size, and orientation by solving for the minimum bounding box of the mask. The effect of the human on the cloth placed on the workbench is then encoded as a time series, and the code is sent to the robotic arm to reproduce the human actions.

Figure 3 Behavioral cloning
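One way to picture the time-series encoding is to compare the cloth poses in consecutive snapshots and record every cloth whose pose changed as a pick-and-place step. The source does not describe its encoding format, so everything in this sketch is an assumption: the function name `encode_actions`, the tolerances, and the idea that each cloth carries a stable identifier across snapshots.

```python
import math

def encode_actions(snapshots, pos_tol=5.0, ang_tol=3.0):
    """Turn a sequence of pose snapshots into ordered pick-and-place steps.

    snapshots: list of dicts mapping a cloth id to (x, y, angle_deg), one
               dict per observation, in time order (hypothetical format).
    pos_tol:   minimum displacement in pixels that counts as a move.
    ang_tol:   minimum rotation in degrees that counts as a move.
    """
    steps = []
    for before, after in zip(snapshots, snapshots[1:]):
        for cloth_id, (x0, y0, a0) in before.items():
            if cloth_id not in after:
                continue  # cloth hidden or removed; nothing to encode
            x1, y1, a1 = after[cloth_id]
            moved = math.hypot(x1 - x0, y1 - y0) > pos_tol
            # Rectangle orientation is periodic with 180 degrees.
            diff = abs(a1 - a0) % 180.0
            turned = min(diff, 180.0 - diff) > ang_tol
            if moved or turned:
                steps.append({
                    "cloth": cloth_id,
                    "pick": (x0, y0, a0),
                    "place": (x1, y1, a1),
                })
    return steps

# Usage with made-up poses: the human moves cloth "a" first, then cloth "b".
snapshots = [
    {"a": (100.0, 100.0, 10.0), "b": (250.0, 120.0, 80.0)},
    {"a": (400.0, 300.0, 0.0), "b": (250.0, 120.0, 80.0)},
    {"a": (400.0, 300.0, 0.0), "b": (401.0, 300.0, 0.0)},
]
steps = encode_actions(snapshots)
```

Replaying the steps in order on the robotic arm would then reproduce the demonstrated stacking sequence.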

3. Fabric palletizing skills learning

To enable the robotic arm to learn cloth palletizing skills, this paper uses a deep reinforcement learning algorithm to build a skill learning framework for the robotic arm. The framework adopts end-to-end control, transforming image input directly into actions at the end of the robotic arm, so that the arm acquires the ability to palletize fabric.

Figure 4 Fabric palletizing skills learning

4. Cloud platform deployment

The data flow of this work covers four stages: camera data collection, image instance segmentation/reinforcement learning action imitation, instance pose transformation, and robotic arm control. Of these, the deep learning prediction model, pose transformation, and mechanical control all run in the cloud. A distributed in-memory database serves as the message bus between the cloud services and handles service distribution.
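The message-bus pattern can be sketched without committing to a particular database. The source does not name the product it uses, so the `InMemoryBus` class below is an in-process stand-in, and the channel names, message fields, and the `mm_per_px` scale factor are all hypothetical. Each service only reads from one channel and writes to the next, which is what lets the services be deployed and scaled independently.

```python
from collections import defaultdict, deque

class InMemoryBus:
    """In-process stand-in for a distributed in-memory database used as a
    message bus: one FIFO queue per named channel."""

    def __init__(self):
        self._channels = defaultdict(deque)

    def publish(self, channel, message):
        self._channels[channel].append(message)

    def consume(self, channel):
        """Return the oldest message on the channel, or None if it is empty."""
        queue = self._channels[channel]
        return queue.popleft() if queue else None

def pose_transform_service(bus, mm_per_px=0.5):
    """Convert segmented instance poses from pixels to workbench millimeters."""
    msg = bus.consume("instances")
    if msg is None:
        return
    poses_mm = [(x * mm_per_px, y * mm_per_px, angle)
                for x, y, angle in msg["poses_px"]]
    bus.publish("targets", {"frame_id": msg["frame_id"], "poses_mm": poses_mm})

def arm_control_service(bus):
    """Turn grasp targets into (illustrative) textual arm commands."""
    msg = bus.consume("targets")
    if msg is None:
        return []
    return [f"grasp x={x:.1f} y={y:.1f} angle={a:.1f}"
            for x, y, a in msg["poses_mm"]]

# Usage: a segmentation result is published, then each service runs once.
bus = InMemoryBus()
bus.publish("instances", {"frame_id": 1, "poses_px": [(200, 100, 30)]})
pose_transform_service(bus)
commands = arm_control_service(bus)
```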

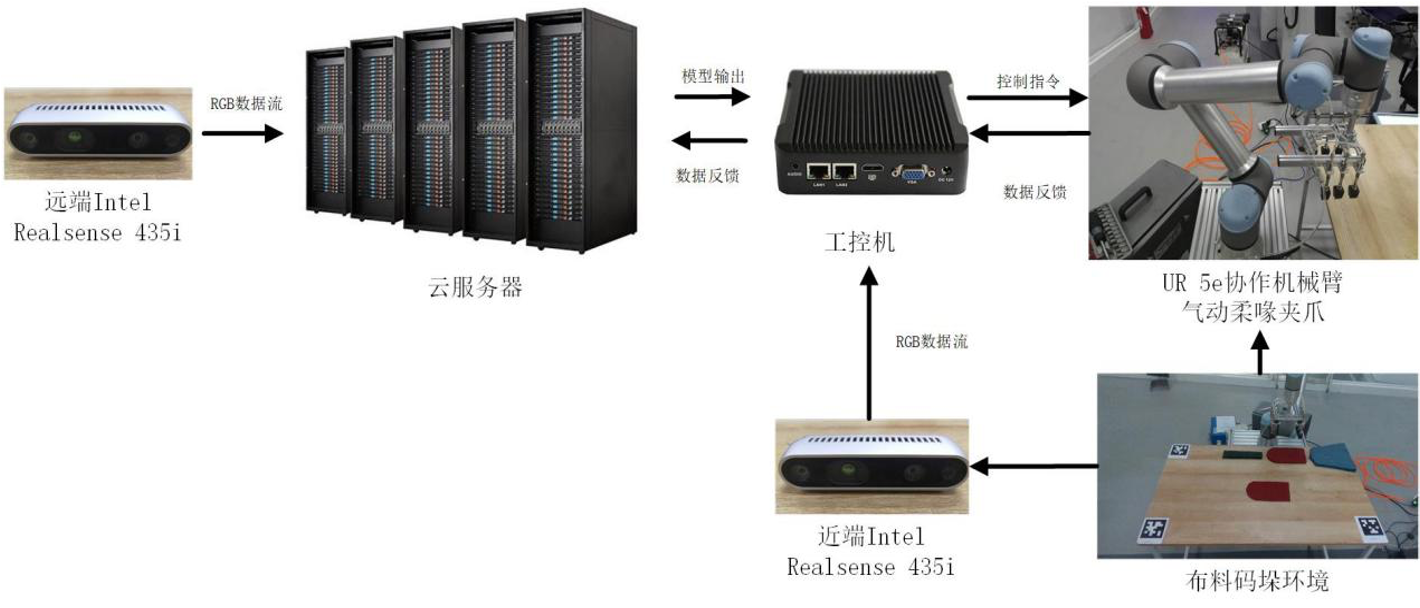

Hardware composition

The hardware designed in this paper consists of five parts: a camera, a cloud platform server, a robotic arm control computer, a UR5e collaborative robotic arm, and a pneumatic soft beak gripper. The function of each component and the data flow are shown in Figure 5.

Figure 5 Overall hardware composition

The near-end camera captures the image and passes it to the robotic arm control industrial computer, which performs the affine transformation and uploads the data to the cloud platform for instance segmentation. The instance targets that the robotic arm needs to grasp are then downloaded to the robotic arm control industrial computer, and the deep reinforcement learning algorithm drives the robotic arm to grasp and release them.

The remote camera captures the operator's actions and uploads them to the cloud platform server, which performs instance segmentation and behavior cloning and transmits information such as the position, orientation, and size of the target to be grasped to the robotic arm control industrial computer.