3D Semantic Scene Map

Building on the previous project, we upgraded the system to capture more detail in the environment and to output the semantic relationships between objects, so that the robot can understand concepts such as "there is a notebook on the table".

The upgraded system provides a front-end perception interface for active service and for analyzing the behavior of the head of the household.

This project uses VoxBlox++ as the baseline for semantic mapping and replaces the MaskRCNN segmentation used by VoxBlox++ with the more recent Yolact++. We also upgraded the depth segmentation algorithm and deployed it on the GPU, where it runs in parallel with Yolact++. Together, these changes increased the inference speed of VoxBlox++ from 2 Hz to 15 Hz.

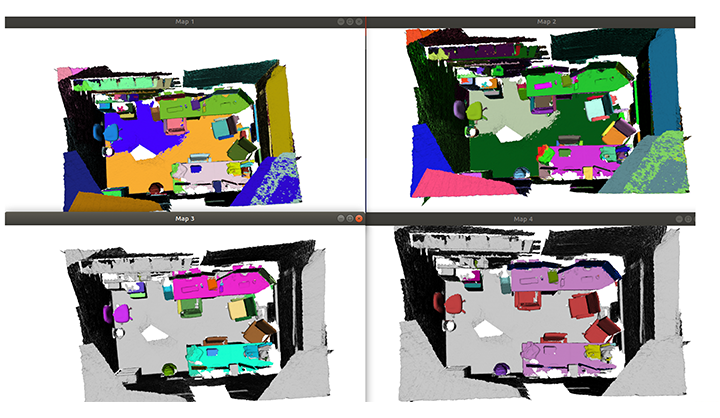

While the improved version of VoxBlox++ is running, we use the ROS octree service to generate a two-dimensional raster (grid) map.

Each object in the semantic map is represented by a three-dimensional bounding box, which is projected onto this two-dimensional grid map.
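The projection step can be illustrated with a minimal sketch. It assumes axis-aligned bounding boxes in map coordinates and a grid described by an origin, a cell size, and a width and height in cells; the names `Box3D`, `project_box_to_grid`, and `paint_footprint` are hypothetical and not part of VoxBlox++ or ROS.

```python
from dataclasses import dataclass
import math


@dataclass
class Box3D:
    """Axis-aligned 3D bounding box in map coordinates (metres). Hypothetical type."""
    label: str
    min_xyz: tuple  # (x, y, z) of the lower corner
    max_xyz: tuple  # (x, y, z) of the upper corner


def project_box_to_grid(box, origin_xy, resolution, width, height):
    """Drop the z axis and return the inclusive cell range covered by the box.

    Returns (col_min, row_min, col_max, row_max), clipped to the grid,
    or None if the footprint lies entirely outside the grid.
    """
    col_min = math.floor((box.min_xyz[0] - origin_xy[0]) / resolution)
    row_min = math.floor((box.min_xyz[1] - origin_xy[1]) / resolution)
    col_max = math.floor((box.max_xyz[0] - origin_xy[0]) / resolution)
    row_max = math.floor((box.max_xyz[1] - origin_xy[1]) / resolution)
    if col_max < 0 or row_max < 0 or col_min >= width or row_min >= height:
        return None
    return (max(col_min, 0), max(row_min, 0),
            min(col_max, width - 1), min(row_max, height - 1))


def paint_footprint(grid, cells, object_id):
    """Write object_id into every grid cell of the projected footprint."""
    col_min, row_min, col_max, row_max = cells
    for row in range(row_min, row_max + 1):
        for col in range(col_min, col_max + 1):
            grid[row][col] = object_id


# Example values are made up for illustration.
table = Box3D("table", (1.1, 2.1, 0.0), (1.9, 2.7, 0.75))
grid = [[0] * 40 for _ in range(40)]  # 40 x 40 cells, 0 means "no object"
cells = project_box_to_grid(table, (0.0, 0.0), 0.25, 40, 40)
if cells is not None:
    paint_footprint(grid, cells, object_id=1)
```

Storing an object id per cell, rather than a plain occupied flag, keeps the link between each 2D footprint and its entry in the semantic map.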

Finally, we embed a scene graph algorithm in VoxBlox++. While VoxBlox++ is running, the feature encoding fused from Yolact++ and depth segmentation is used as the input to Factorizable Net, which establishes more precise semantic connections between objects and links them with positional prepositions. (This part is still under study.)

In this way, a map of the real scene is output and the robot can understand the room layout.
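To make the idea of a positional relation concrete, the sketch below derives an "on" relation directly from two 3D bounding boxes. It is a hand-written geometric rule used only for illustration, not Factorizable Net and not the project's method; the names `Box3D`, `is_on`, and `describe`, and the 5 cm tolerance, are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Box3D:
    """Axis-aligned 3D bounding box in map coordinates (metres). Hypothetical type."""
    label: str
    min_xyz: tuple  # (x, y, z) of the lower corner
    max_xyz: tuple  # (x, y, z) of the upper corner


def overlap_1d(a_min, a_max, b_min, b_max):
    """Length of the overlap between two intervals, 0.0 if they are disjoint."""
    return max(0.0, min(a_max, b_max) - max(a_min, b_min))


def is_on(top, support, z_tolerance=0.05):
    """True if the bottom of `top` rests near the upper face of `support`
    and their horizontal footprints overlap."""
    gap = top.min_xyz[2] - support.max_xyz[2]
    if abs(gap) > z_tolerance:
        return False
    overlap_x = overlap_1d(top.min_xyz[0], top.max_xyz[0],
                           support.min_xyz[0], support.max_xyz[0])
    overlap_y = overlap_1d(top.min_xyz[1], top.max_xyz[1],
                           support.min_xyz[1], support.max_xyz[1])
    return overlap_x > 0.0 and overlap_y > 0.0


def describe(a, b):
    """Return a phrase such as 'notebook on table', or None if neither rests on the other."""
    if is_on(a, b):
        return f"{a.label} on {b.label}"
    if is_on(b, a):
        return f"{b.label} on {a.label}"
    return None


# Example values are made up for illustration.
table = Box3D("table", (1.1, 2.1, 0.0), (1.9, 2.7, 0.75))
notebook = Box3D("notebook", (1.3, 2.2, 0.76), (1.6, 2.4, 0.78))
lamp = Box3D("lamp", (5.0, 5.0, 0.0), (5.2, 5.2, 1.0))
relation = describe(table, notebook)
```

A fixed rule like this only covers simple support relations and is sensitive to noisy box estimates, which is one reason a learned scene graph model is of interest for the richer relations.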